Stuck in pilot - Part 0: The comfort of the sandbox

“Our AI chatbot is live, in a test environment, for … uhm … 18 months.”

Sound familiar?

If your organization has a hallway legend about a “pilot” project that never saw daylight, you’re not alone. Across industries, AI pilots have become the business equivalent of Schrödinger’s cat: both alive and dead, depending on who’s presenting the PowerPoint. They give the illusion of innovation without requiring anyone to actually commit to change.

Let’s talk about why pilots feel safe, why that’s a trap, and why not piloting isn’t the answer either.

Pilots are easy to love

There’s something comforting about a pilot. It’s non-threatening. Limited in scope. Budget-contained. And most importantly: No one gets fired if it doesn’t work. Pilots are where good ideas go to hide from accountability.

In a sandbox, you don’t have to think about integrating with your CRM, fixing bad data, or training customer support teams. You can just build a chatbot, or drop an AI summarizer into a Power Automate flow, and feel like you’re part of the future. And hey, if it looks cool enough, maybe someone will even share it in the All Hands meeting. Yay!

Pilots without a path to production are organizational theater.

Performative innovation

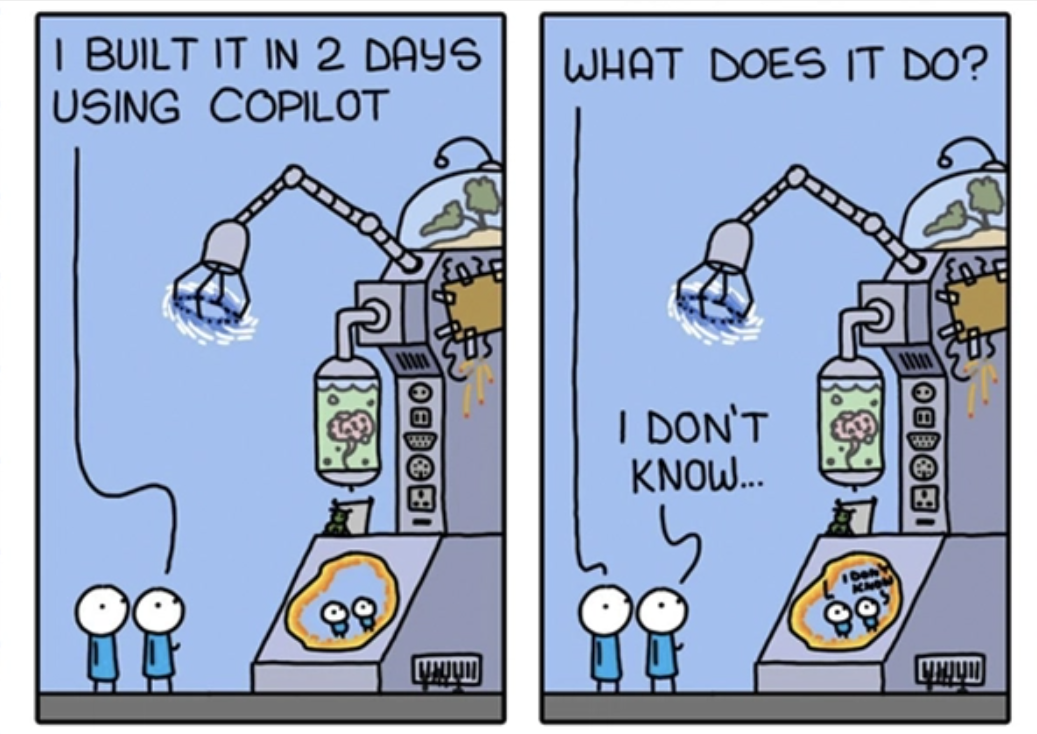

I call it pilot theater, the corporate cousin of innovation theater. It’s performative and looks like progress, but it’s just a loop of demo–hype–pause–repeat.

You spend six months “validating feasibility”, only to realize no one planned for ownership, integration, or ongoing support. So the pilot gets parked. Or worse, someone suggests starting a new pilot. One that’s even more exciting! (And just as doomed.)

In the meantime, the business still has the same inefficiencies and the same bottlenecks, now with the added bonus of AI fatigue.

The infamous use case list won’t save you

A common symptom of pilot theater is the infamous “AI use case list”. You gather 20, 30, maybe 50 ideas from across the company. Some are genuinely good. Others? Not so much.

The problem isn’t the ideas; it’s the lack of clarity about what to do with them.

I’ve written about this in detail elsewhere, but in short: use case lists give the illusion of progress. They help you say, “Look at how much we could do!” without answering the more important question: “What are we actually ready for?”

What’s missing isn’t imagination, but prioritization, ownership, and feasibility.

Skipping pilots is worse

Let’s be clear: not piloting is not the answer either. Nobody should roll out AI to all 18,000 employees at once. That’s not bold; that’s reckless. Pilots are essential to reduce risk, test assumptions, and validate business value in a controlled environment.

But a pilot should be the start of a journey, not a loop.

A solid pilot begins with a hypothesis: a testable, grounded assumption about how AI could improve a specific business process. Maybe you believe that automating invoice routing will cut manual handling time by 50%. Or that an AI-powered summarizer will reduce response time in your support center by 15%. That hypothesis must be linked to something tangible, something you can actually measure.

Which brings us to metrics. If your pilot has no business metrics, no baseline data, and no plan for what happens if it works… congratulations, you’re not piloting. You’re procrastinating.

Pilots need an ending

One of the reasons pilots get stuck is that no one agreed on how they’d end. You need exit criteria: clear, objective statements that define what “successful”, “inconclusive”, or “not worth continuing” looks like. These should be defined before the pilot starts, not when someone asks for a status update six months in.

For example:

“We’ll consider the pilot successful if the AI reduces invoice processing time by 30%, with an error rate under 2%, and if the three-person accounting team finds it usable without additional training.”

Not only does this force clarity early on, it gives everyone a shared target. And more importantly, it avoids the trap of “it’s not bad… let’s just keep it running in test.”
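To make this concrete, here is a minimal sketch of how the example criteria above could be written down as a check that returns one of the three outcomes. The thresholds come from the example; the class and function names are hypothetical, reading “reduces by 30%” as “at least 30%” is an interpretation, and the rule that “some but not all criteria met” means “inconclusive” is an assumption your team would have to agree on up front.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PilotResult:
    """Measured results of the invoice pilot (hypothetical structure)."""
    time_reduction_pct: float      # measured drop in invoice processing time
    error_rate_pct: float          # share of invoices processed incorrectly
    usable_without_training: bool  # verdict from the accounting team


def evaluate(result: PilotResult) -> str:
    """Map measured results to one of the three agreed outcomes."""
    checks = [
        result.time_reduction_pct >= 30.0,  # "reduces processing time by 30%"
        result.error_rate_pct < 2.0,        # "error rate under 2%"
        result.usable_without_training,     # "usable without additional training"
    ]
    if all(checks):
        return "successful"
    if not any(checks):
        return "not worth continuing"
    # Assumption: a mixed picture counts as inconclusive.
    return "inconclusive"


print(evaluate(PilotResult(35.0, 1.2, True)))
```

The point isn’t the code itself. It’s that the criteria are specific enough that you could write them as code before the pilot starts.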

Of course, exit criteria only work if they’re connected to real business metrics. And I don’t mean “number of chatbot clicks” or “hours spent coding”. I mean measurable outcomes:

- How much time was saved?

- Was manual effort reduced?

- Did we see fewer errors?

- Was customer satisfaction improved?

- Did operational throughput increase?

Start by capturing the baseline: how long does this process take today? What are users frustrated about? What’s the current failure rate?

Then, define what change would make the pilot worth scaling. Measure the delta. Track results. And make a decision. All of this should be part of your pilot planning. Not a side note, not a retrospective, but a section right at the top of your one-pager:

- What are we testing?

- What does success look like?

- What will we do if we get it?

Because a good pilot starts with a hypothesis, includes a measurable outcome, and ends with a decision: scale, pivot, or stop.
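The “capture the baseline, then measure the delta” step is simple arithmetic, sketched below under stated assumptions. The handling times are made-up numbers for illustration only, the function name is hypothetical, and comparing plain averages is a simplification; a real pilot would also want enough samples to trust the difference.

```python
from statistics import mean


def relative_reduction_pct(baseline: list[float], pilot: list[float]) -> float:
    """Percentage by which the pilot mean is lower than the baseline mean."""
    base = mean(baseline)
    if base <= 0:
        raise ValueError("baseline mean must be positive")
    return (base - mean(pilot)) / base * 100.0


# Hypothetical handling times in minutes per invoice, for illustration only.
baseline_minutes = [20.0, 22.0, 18.0, 20.0]  # measured before the pilot
pilot_minutes = [13.0, 14.0, 12.0, 13.0]     # measured during the pilot

delta = relative_reduction_pct(baseline_minutes, pilot_minutes)
print(f"Processing time reduced by {delta:.1f}%")
```

Without the baseline list, there is nothing to compare against, which is exactly why it has to be captured before the pilot begins.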

Signs you’re stuck

If any of these apply, you’re probably stuck:

- Your pilot is older than a year

- There’s no rollout plan, just another “phase”

- The project is owned by IT, but not the business

- The same demo has been shown to three leadership teams

- No one can tell you what success looks like

Pilots should make people uncomfortable

Good pilots challenge something. A process. A decision. A mindset. They surface the real blockers (data quality, siloed teams, broken incentives) so that the organization can deal with them before a full rollout. If your AI pilot made everyone feel safe, it probably didn’t do much.

Let’s end with this

“If your AI pilot was never designed to leave the sandbox, it wasn’t a pilot, it was a safe place to pretend you’re changing.”

What’s next in this series

This post is part of the “Stuck in Pilot” blog series. Coming up next:

- Part 1: no foundations, no future

What your AI pilot was missing before it even started - to be published soon™️

- Part 2: fear of success

Why good pilots still don’t go live - to be published soon™️

- Part 3: your chatbot isn’t useful

When pilots fail because no one asked if the solution matters - to be published soon™️

- Part 4: from stuck to scaled

A roadmap for turning a successful pilot into real business value - to be published soon™️
