Tired of the AI hype machine

The feed vs. the floor

Open LinkedIn and you’ll see the same recycled energy over and over:

- AI will reinvent your entire industry!

- The companies that don’t adapt will die!

- Here’s how I use AI to 10x my thinking in under 3 minutes!

Then, in the comments:

- 🔥 “Incredible insight!”

- 🤝 “Exactly what I’ve been saying!”

- 🧠 “Have you tried asking ChatGPT politely?”

And so many rocket 🚀🚀🚀 emojis.

Meanwhile, in actual companies:

- Our Copilot licenses are active, but no one knows where to start

- We built a chatbot, but it just repeats things from our website

- We don’t trust the output, so we still do it manually anyway

This is the real gap:

LinkedIn is busy arguing over whether AI understands ‘please’ and ‘thank you’ while orgs are still struggling to make AI useful at all.

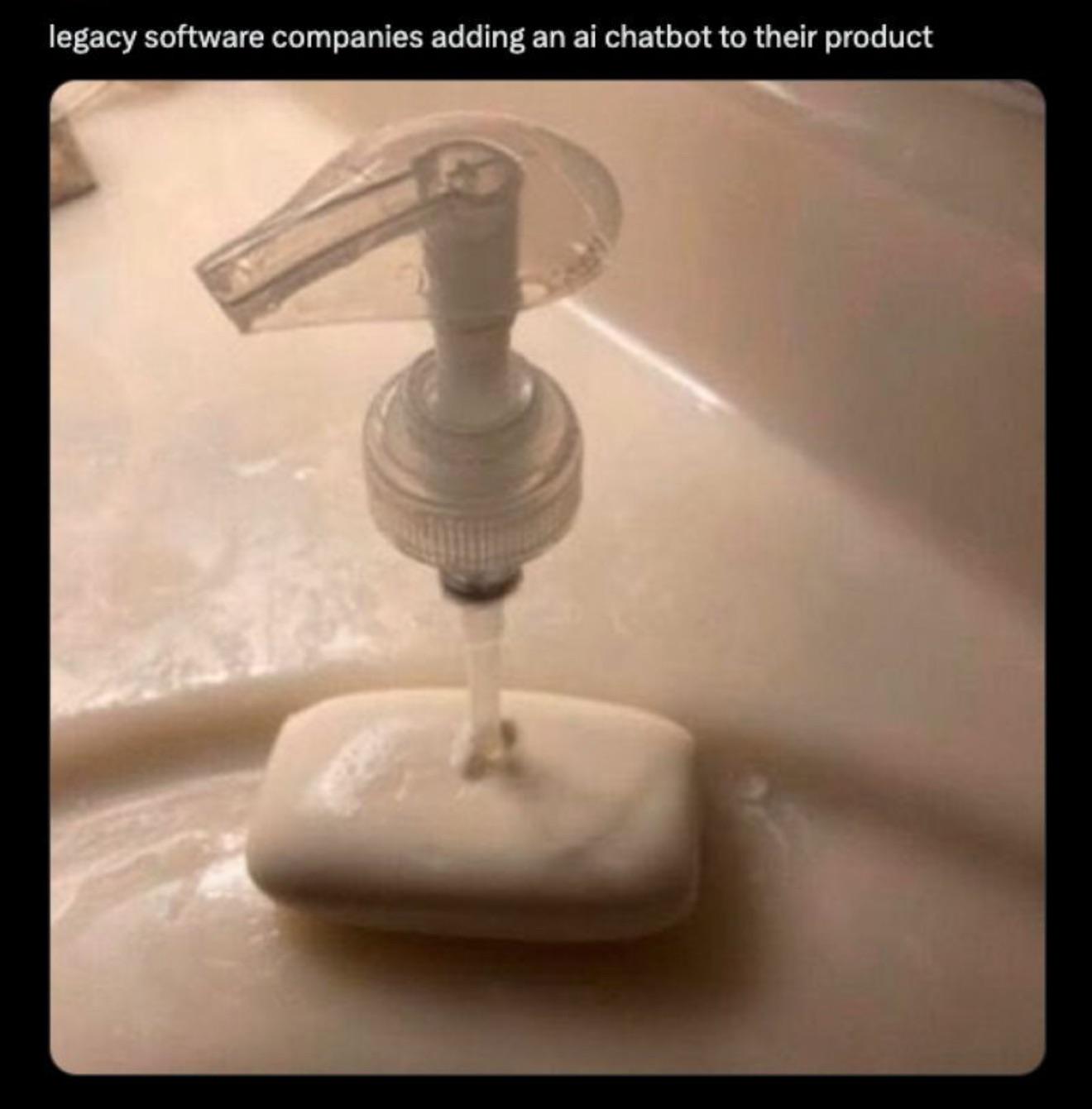

Most AI implementations right now are stuck at what I call the chatbot plateau. We’re not solving real business problems; we’re giving people a generic chat interface and calling it innovation. Instead of improving how knowledge is structured or decisions are made, we offer employees yet another vague prompt box: “Ask me anything!” The result? A confused user asking for the parental leave policy, only to get an outdated file, a broken link, or a non-answer dressed up in polite language. This isn’t transformation; it’s deflection. Slapping a chatbot onto an intranet doesn’t fix content chaos, broken search, or unclear ownership. It just buries them one layer deeper. And yet, this is what passes for AI integration in far too many orgs right now, because it demos well and looks futuristic, even when it delivers nothing. So while the feed celebrates another prompt tip or AI-generated image, the floor is dealing with broken systems and bigger questions.

The AI theatre is exhausting

You’ve probably seen the elephant. Someone prompts a generative model: “Create an image of a group of people with no elephant in it.” What do you get? A lovely picnic scene… with a giant elephant in the middle. Funny? Sure. Insightful? Maybe once. Helpful to anyone trying to automate a broken procurement process? Not even slightly.

This is the noise:

- Prompt hacks, AI-generated carousels, and ethics debates held entirely in the abstract

- Thought leaders posting about their AI-first mindset from the comfort of a slide deck

- Dozens of use cases that are clever, but not valuable

And in the background, the actual AI problems remain unsolved: Data quality. Governance. Integration. User adoption. Real-world constraints.

What working AI actually looks like

The irony? AI does have real potential. But the projects that succeed don’t start with elephants, ethics debates, or tip-of-the-day posts. They start with grounded questions:

- Where is our time being wasted?

- Which decisions are based on guesswork?

- Which manual steps could be automated safely and meaningfully?

Transformation happens when teams define real problems, connect usable data, and build human-first, feedback-driven workflows, and then, and only then, add AI where it helps.

Stop solving imaginary problems

Saying “thank you” to a language model doesn’t affect your ROI. Neither does creating a prompt that includes “without an elephant.” Here’s what does:

- Building use cases that reduce real friction for real users

- Understanding when automation is helpful—and when it’s just noise

- Getting past the AI-as-magic thinking, and focusing on design, intent, and structure

I’ve seen far more impactful results from AI when it’s embedded deeply into processes and not just layered on as a chatbot. In past projects, I’ve worked with teams to implement predictive maintenance using sensor data to prevent costly downtime in industrial environments. We’ve used AI-powered document intelligence to extract clauses, renewal dates, and obligations from thousands of contracts. This saved legal teams hours of manual review and triggered automated workflows in Microsoft 365. In finance, I’ve helped build models to detect anomalies in transactional data and flag potential risks long before audits catch them. HR teams I’ve worked with now use AI to identify bias in job descriptions and streamline internal mobility by matching people to roles based on skills, not job titles. I’ve also helped service teams move past basic bots and into intelligent routing, where incoming requests are prioritized and sent to the right team based on AI-driven classification and not just a keyword match.

None of these required a “chat with your data” interface. They required clear goals, usable data, and the right architecture. These projects don’t go viral. But they solve actual problems. That’s the kind of AI transformation I’m here for.

Final thought

I believe in the potential of AI. But I also believe most organizations are still early in the journey, despite what LinkedIn would have you believe. So if you’re not chasing the elephant or debating prompt tone, good. You’re probably closer to meaningful AI than most of the visionaries. Let’s keep doing the work. The real kind.
